Introduction

Cloud-native technologies and DevOps are a natural pair. Containers, microservices, and declarative automation give teams the speed and resilience DevOps promises, while platforms like Kubernetes, Terraform, and modern CI/CD tooling make that repeatable at scale. In this guide, you’ll learn the core patterns, tools, benefits, and trade-offs of cloud-native DevOps, with real-world examples and a practical set of best practices you can apply today.

Why Cloud-Native Supercharges DevOps

- Faster delivery: Smaller services + CI/CD = more frequent, safer releases

- Elastic scale: Autoscale to traffic, pay for what you use

- Higher resilience: Fault isolation prevents cascading failures

- Portability: Run across clouds and reduce lock-in

- Observability: Metrics, logs, and traces built in for lower MTTR (mean time to recovery)

Understanding Cloud-Native Technologies

Cloud-native technologies represent a modern approach to building and running applications that fully exploit the advantages of cloud computing. The approach grew out of a shift away from traditional monolithic architectures toward more modular and scalable systems. The term cloud-native refers to applications designed specifically to run in cloud environments, using tools and techniques that enhance agility, resilience, and portability.

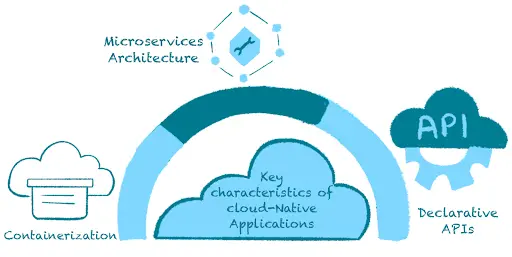

Key Characteristics of Cloud-Native Applications (CNAs)

Containerization:

Containers package applications and their dependencies into a single, portable unit that can run consistently across various environments. This ensures that the application behaves the same regardless of where it is deployed, be it a developer’s laptop, a private data centre, or a public cloud.

Microservices Architecture:

Unlike traditional monolithic applications, which are built as a single, interconnected unit, microservices architecture breaks down applications into smaller, independent services. Each service focuses on a specific business function and communicates with others through APIs. This allows for greater flexibility, as individual services can be developed, deployed, and scaled independently.

Declarative APIs:

Declarative APIs specify the desired state of the system rather than the steps to achieve that state. This approach simplifies the management and orchestration of cloud-native applications, making it easier to automate tasks such as deployment, scaling, and recovery.
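
The sketch below illustrates the declarative model with a reconciliation loop in the spirit of a Kubernetes controller. It makes simplifying assumptions: state is a hypothetical mapping from service name to replica count, and `reconcile` and `apply_actions` are illustrative helpers, not part of any real Kubernetes API. The caller declares what it wants, and the loop derives how to get there.

```python
from typing import Dict, List, Tuple

# Hypothetical state model: service name -> number of running replicas.
State = Dict[str, int]
# An action is (verb, service, count), e.g. ("start", "api", 2).
Action = Tuple[str, str, int]


def reconcile(desired: State, observed: State) -> List[Action]:
    """Compute the actions that move the observed state toward the desired state."""
    actions: List[Action] = []
    for service in sorted(desired.keys() | observed.keys()):
        want = desired.get(service, 0)
        have = observed.get(service, 0)
        if want > have:
            actions.append(("start", service, want - have))
        elif want < have:
            actions.append(("stop", service, have - want))
    return actions


def apply_actions(observed: State, actions: List[Action]) -> State:
    """Simulate executing the actions; returns the new observed state."""
    result = dict(observed)
    for verb, service, count in actions:
        result[service] = result.get(service, 0) + (count if verb == "start" else -count)
        if result[service] == 0:
            del result[service]  # nothing left running for this service
    return result


if __name__ == "__main__":
    desired = {"api": 3, "worker": 2}
    observed = {"api": 1, "legacy": 1}  # drifted: too few api replicas, stray service
    plan = reconcile(desired, observed)
    print(plan)  # [('start', 'api', 2), ('stop', 'legacy', 1), ('start', 'worker', 2)]
    print(apply_actions(observed, plan) == desired)  # True
```

Running `reconcile` again after the actions are applied yields an empty list. This is what makes the approach self-correcting: any later drift simply shows up as new actions on the next pass.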

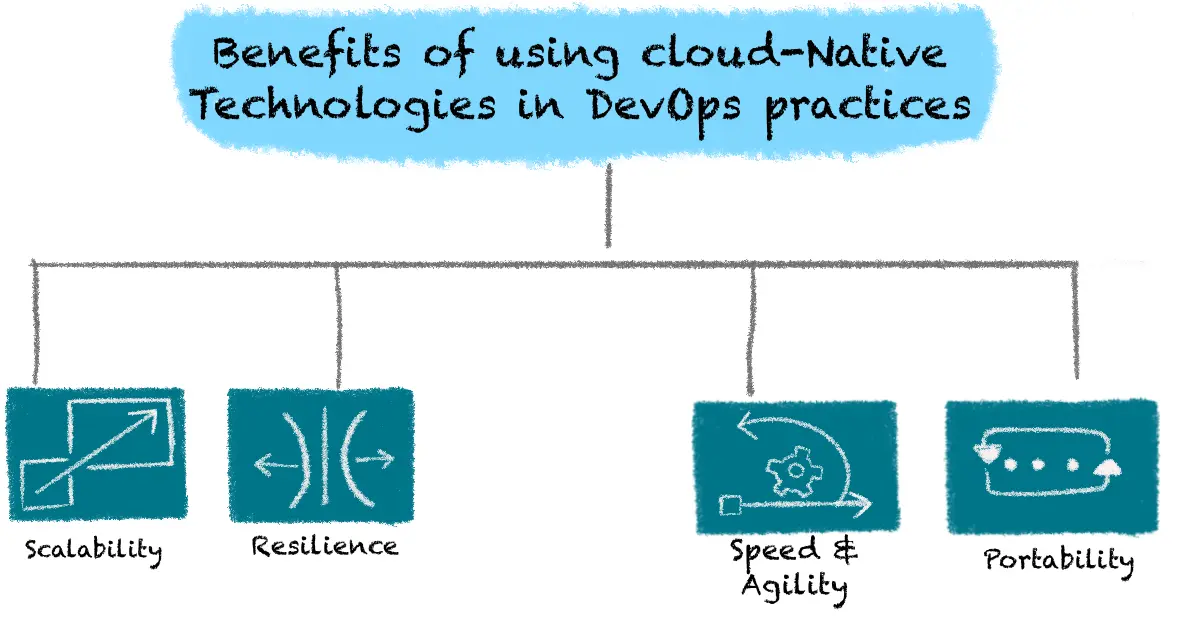

Benefits of Using Cloud-Native Technologies in DevOps Practices

Scalability:

Cloud-native applications can dynamically scale resources up or down based on demand, helping balance performance against cost. This is particularly valuable in DevOps environments where workload demands can be unpredictable.

Resilience:

The modular nature of cloud-native applications means that individual components can fail without bringing down the entire system. This enhances the overall resilience and reliability of the application, aligning with DevOps principles of continuous availability and rapid recovery.

Speed and Agility:

Containerization and microservices can significantly shorten development and deployment cycles. Teams can release updates and new features more frequently and with less risk, fostering a culture of continuous improvement and innovation.

Portability:

Cloud-native applications built on open standards such as containers and Kubernetes can run on most major cloud providers’ infrastructure, providing flexibility and reducing vendor lock-in. This aligns with the DevOps goal of creating flexible, adaptable systems.

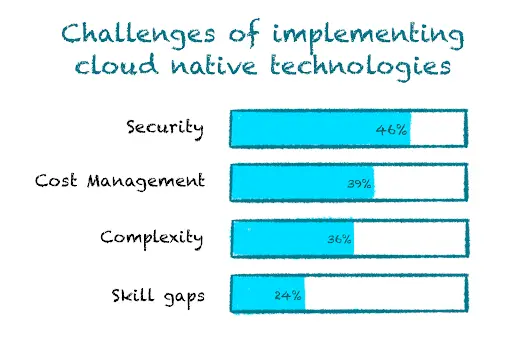

Challenges of Implementing Cloud-Native Technologies

Complexity:

Moving to a cloud-native architecture involves significant changes to development and operational processes. The complexity of managing multiple microservices and containers can be daunting, especially for teams accustomed to monolithic applications.

Skill Gaps:

Adopting cloud-native technologies requires specialized knowledge and expertise. Organizations may face challenges in upskilling their existing workforce or recruiting talent with the necessary skills.

Security:

While cloud-native technologies offer many benefits, they also introduce new security challenges. Ensuring the security of containerized applications, managing API security, and protecting data in a distributed environment require robust security practices and tools.

Cost Management:

Although cloud-native technologies can lead to cost savings through efficient resource utilization, the initial setup and ongoing management can be expensive. Organizations need to plan and monitor their cloud expenditures carefully to avoid overspending.

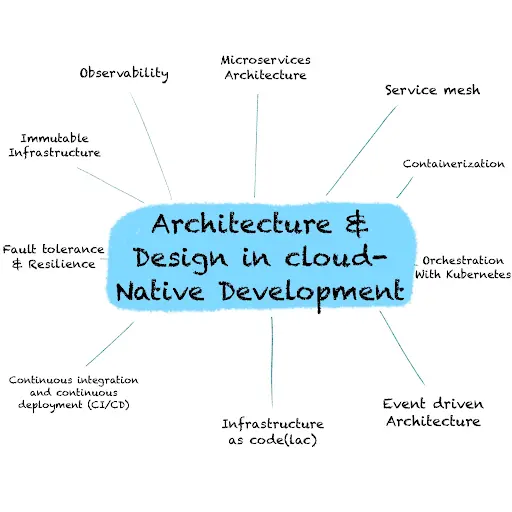

Architecture and Design Patterns in Cloud-Native Development

Cloud-native development emphasizes scalability, resilience, and agility. Achieving these goals requires careful consideration of architecture and design patterns.

Let's explore some key patterns:

- Microservices Architecture: The microservices architecture is fundamental to cloud-native development. It involves breaking down applications into small, independently deployable services. This allows for easier scaling, maintenance, and development of each service.

- Service Mesh: A service mesh like Istio or Linkerd is used to manage the communication between microservices. It provides features such as load balancing, service discovery, and encryption, enhancing the reliability and security of microservices-based applications.

- Containerization: Containers, most commonly built with Docker, are used to package and deploy microservices. Containers provide lightweight, isolated environments for running applications, making them portable and scalable.

- Orchestration with Kubernetes: Kubernetes is used to orchestrate the deployment, scaling, and management of containers. It provides features like auto-scaling, rolling updates, and service discovery, simplifying the management of containerized applications.

- Event-Driven Architecture: Cloud-native applications often use an event-driven architecture to decouple components and enable asynchronous communication. This allows for better scalability and resilience.

- Infrastructure as Code (IaC): IaC is used to define and manage infrastructure using code. This allows for the automated provisioning and configuration of infrastructure, making deployments more reliable and repeatable.

- Continuous Integration and Continuous Deployment (CI/CD): CI/CD pipelines are used to automate the building, testing, and deployment of applications. This allows for faster and more reliable delivery of features and updates.

- Fault Tolerance and Resilience: Cloud-native applications are designed to be fault-tolerant and resilient. This involves strategies such as redundancy, circuit breakers, and graceful degradation to ensure that the application remains available and responsive in the face of failures.

- Immutable Infrastructure: Immutable infrastructure treats servers and other components as disposable. Rather than being modified after deployment, they are replaced with new instances built from a versioned image or definition. This reduces the risk of configuration drift and makes deployments more predictable.

- Observability: Observability is crucial in cloud-native development. It involves monitoring, logging, and tracing to gain insights into the behaviour of applications and infrastructure, helping to identify and troubleshoot issues quickly.
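
Of the patterns above, event-driven decoupling is the easiest to show in a few lines. The sketch below uses a hypothetical in-memory `EventBus` class. A production system would use a message broker that persists events and delivers them asynchronously; this version calls handlers synchronously, in subscription order. What carries over is the shape: the publisher names a topic, not its consumers.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Hypothetical in-memory event bus illustrating publish/subscribe decoupling.

    Not a real broker: no persistence, no retries, synchronous delivery.
    """

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Dict[str, Any]) -> int:
        """Deliver `event` to every handler on `topic`; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


if __name__ == "__main__":
    bus = EventBus()
    shipped: List[str] = []
    emails: List[str] = []
    # Two independent consumers react to the same event.
    bus.subscribe("order.placed", lambda e: shipped.append(e["order_id"]))
    bus.subscribe("order.placed", lambda e: emails.append(e["customer"]))
    # The order service only publishes; it never calls either consumer directly.
    bus.publish("order.placed", {"order_id": "A-100", "customer": "dana@example.com"})
    print(shipped, emails)  # ['A-100'] ['dana@example.com']
```

Adding a third consumer (say, analytics) means one more `subscribe` call, with no change to the publishing service.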

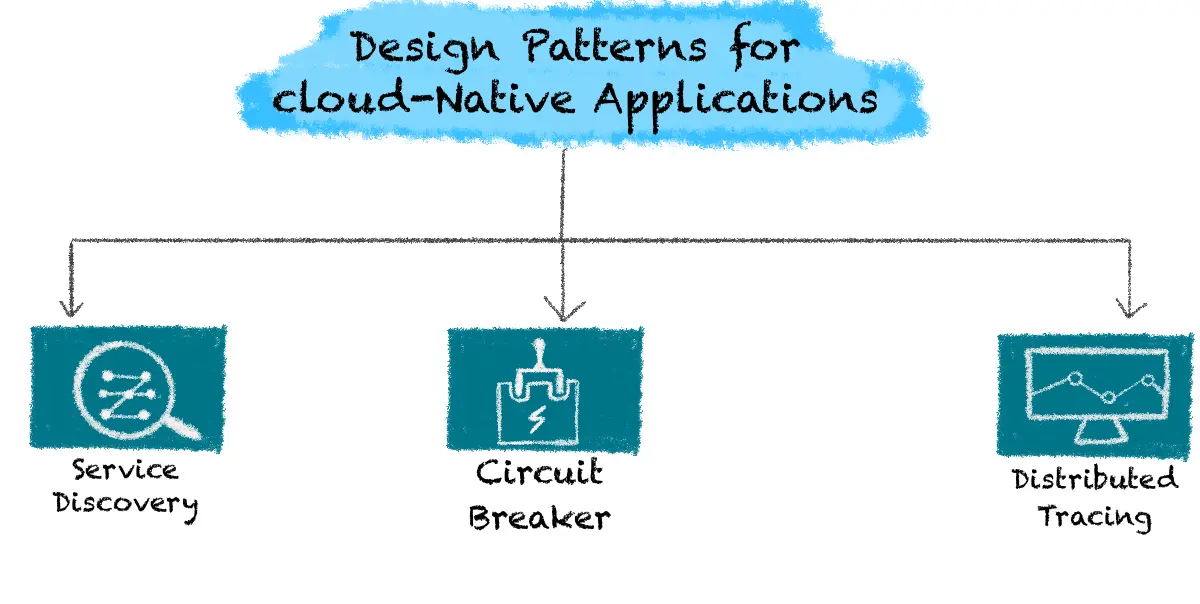

Design Patterns for Cloud-Native Applications

Service Discovery:

In a microservices architecture, services need to locate and communicate with each other. Service discovery mechanisms automatically detect services in the network, allowing them to find and interact with each other without hardcoded endpoints. Tools like Consul, Eureka, and Kubernetes' built-in service discovery facilitate this process.
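
Here is a minimal sketch of the mechanism, assuming a hypothetical in-memory `ServiceRegistry`. Instances register under a logical name, and callers resolve that name at request time (here with simple round-robin) instead of hardcoding an address. Real systems such as Consul or Kubernetes Services also health-check instances and remove dead ones, which this sketch leaves out.

```python
import itertools
from typing import Dict, Iterator, List


class ServiceRegistry:
    """Hypothetical registry mapping a logical service name to instance addresses."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}
        self._rotations: Dict[str, Iterator[str]] = {}

    def register(self, name: str, address: str) -> None:
        self._instances.setdefault(name, []).append(address)
        self._rotations.pop(name, None)  # membership changed: restart rotation

    def deregister(self, name: str, address: str) -> None:
        instances = self._instances.get(name, [])
        if address in instances:
            instances.remove(address)
        self._rotations.pop(name, None)

    def resolve(self, name: str) -> str:
        """Return the next known instance for `name`, round-robin."""
        instances = self._instances.get(name)
        if not instances:
            raise LookupError(f"no instances registered for {name!r}")
        if name not in self._rotations:
            self._rotations[name] = itertools.cycle(list(instances))
        return next(self._rotations[name])


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.register("payments", "10.0.0.5:8080")
    registry.register("payments", "10.0.0.6:8080")
    # Callers only know the name "payments", never the addresses.
    print([registry.resolve("payments") for _ in range(3)])
    # ['10.0.0.5:8080', '10.0.0.6:8080', '10.0.0.5:8080']
```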

Circuit Breakers:

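Circuit breakers are a design pattern used to prevent cascading failures in distributed systems. If calls to a service start failing or timing out beyond a threshold, the circuit breaker trips and rejects further calls immediately instead of waiting on the failing service. After a cool-down period, it lets trial requests through and closes again once they succeed. This helps maintain the stability of the overall system. Netflix’s Hystrix (now in maintenance mode) and Resilience4j, which Netflix recommends for new projects, are well-known implementations of this pattern.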
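
The sketch below implements the three-state behavior (closed, open, half-open) from scratch. It is not the Hystrix or Resilience4j API; both of those are Java libraries. Several choices here are assumptions:

- The threshold and timeout defaults are arbitrary.
- Only one trial call is allowed in the half-open state.
- The injectable `clock` parameter exists so the logic can be tested without sleeping.

The sketch is single-threaded; a concurrent version would need locking.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    """Illustrative circuit breaker (not a real library's API).

    CLOSED: calls pass through; consecutive failures are counted.
    OPEN: after `failure_threshold` failures, calls fail fast.
    HALF_OPEN: once `reset_timeout` seconds pass, a trial call is allowed;
    success closes the circuit, failure reopens it.
    """

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "CLOSED"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "HALF_OPEN"
        return "OPEN"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "OPEN":
            raise CircuitOpenError("circuit open; failing fast")
        try:
            result = fn()
        except Exception:
            self._record_failure()
            raise
        # Any success, including a half-open trial, closes the circuit.
        self._failures = 0
        self._opened_at = None
        return result

    def _record_failure(self) -> None:
        if self.state == "HALF_OPEN":
            self._opened_at = self._clock()  # trial failed: reopen
            return
        self._failures += 1
        if self._failures >= self.failure_threshold:
            self._opened_at = self._clock()


if __name__ == "__main__":
    breaker = CircuitBreaker(failure_threshold=2, reset_timeout=5.0)

    def flaky() -> str:
        raise ConnectionError("payments service unavailable")

    for attempt in range(3):
        try:
            breaker.call(flaky)
        except CircuitOpenError:
            print(attempt, "rejected immediately (circuit open)")
        except ConnectionError:
            print(attempt, "call failed, state:", breaker.state)
```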

Distributed Tracing:

Distributed tracing allows tracking requests as they flow through various services in a microservices architecture. This provides visibility into the system's behavior and helps diagnose issues across multiple services. Tools like Jaeger and Zipkin are commonly used for distributed tracing.
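
Distributed tracing only works if every service forwards a trace context on its outgoing calls. This sketch assumes the W3C Trace Context `traceparent` header, which is the default propagation format in OpenTelemetry; Jaeger and Zipkin have historically also used their own header formats. Under that assumption, a service keeps the incoming trace ID and mints a new span ID for its own hop. The helper names are hypothetical, and only header version `00` is handled.

```python
import re
import secrets
from typing import NamedTuple, Optional

# W3C "traceparent" layout: version-traceid(32 hex)-parentid(16 hex)-flags(2 hex)
_TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


class SpanContext(NamedTuple):
    trace_id: str  # shared by every span in one request's journey
    span_id: str   # identifies a single hop
    flags: str     # "01" means the trace is sampled


def parse_traceparent(header: str) -> Optional[SpanContext]:
    """Hypothetical helper: parse a version-00 traceparent header, or return None."""
    match = _TRACEPARENT.match(header.strip())
    if not match:
        return None
    trace_id, span_id, flags = match.groups()
    if set(trace_id) == {"0"} or set(span_id) == {"0"}:
        return None  # all-zero IDs are invalid
    return SpanContext(trace_id, span_id, flags)


def child_traceparent(incoming: Optional[str]) -> str:
    """Hypothetical helper: header to send downstream (same trace, new span ID)."""
    parent = parse_traceparent(incoming) if incoming else None
    trace_id = parent.trace_id if parent else secrets.token_hex(16)  # start a new trace
    flags = parent.flags if parent else "01"
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


if __name__ == "__main__":
    incoming = "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
    # Same trace ID as the incoming request, with a fresh span ID for this hop.
    print(child_traceparent(incoming))
```

Because every hop shares the trace ID, a tracing backend can stitch the spans from all services into one end-to-end view of the request.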

Real-World Examples of Cloud-Native Architectures

Netflix:

Netflix is a prime example of leveraging cloud-native technologies. They use a microservices architecture running on AWS, with each service responsible for a specific aspect of their platform. They employ advanced design patterns like circuit breakers, service discovery, and distributed tracing to ensure their services are scalable and resilient.

Uber:

Uber’s architecture is built on cloud-native principles, utilizing microservices, containers, and a robust observability stack. This allows them to handle massive amounts of data and requests efficiently, ensuring reliability and scalability even during peak usage times.

How Cloud-Native Technologies Power Modern Software

Faster Development Cycles:

Cloud-native technologies enable continuous integration and continuous deployment (CI/CD) pipelines, which automate the testing and deployment processes. This reduces the time needed to deliver new features and updates, allowing development teams to iterate quickly and respond to user feedback more effectively.

Improved Scalability:

By leveraging container orchestration platforms like Kubernetes, cloud-native applications can automatically scale in response to changing demands. This dynamic scaling ensures that resources are used efficiently, providing a seamless user experience even during traffic surges.
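
The Kubernetes Horizontal Pod Autoscaler (HPA) documentation describes its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below reproduces that arithmetic, along with the default 10% tolerance and the min/max replica bounds. The `max_replicas=10` default is an arbitrary assumption; the HPA requires you to set it explicitly. The sketch deliberately omits the real controller's stabilization windows, pod-readiness handling, and multi-metric logic.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count from the HPA's documented core formula.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped to
    [min_replicas, max_replicas]. The bound defaults are illustrative.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        proposed = current_replicas  # close enough to target: avoid flapping
    else:
        proposed = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target -> scale out to 6.
    print(desired_replicas(4, 90, 60))
    # 4 pods at 63% is within the 10% tolerance -> stay at 4.
    print(desired_replicas(4, 63, 60))
    # A large spike is capped by max_replicas -> 10.
    print(desired_replicas(3, 300, 50))
```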

Enhanced Reliability:

The resilience of cloud-native architectures, supported by design patterns like circuit breakers and service discovery, ensures that applications can withstand failures and continue operating smoothly. Additionally, the observability tools integrated into cloud-native environments provide real-time insights into system health, allowing for proactive maintenance and quick issue resolution.

Through these principles and design patterns, cloud-native technologies empower organizations to build applications that are not only more agile and scalable but also more robust and reliable. These capabilities align perfectly with DevOps practices, driving innovation and operational excellence.

By incorporating Atmosly into your cloud-native strategy, you can use its platform to enhance these benefits further. Atmosly provides tools and services designed to streamline cloud-native development, improve observability, and automate key aspects of your DevOps workflows, helping keep your applications performing at their best.

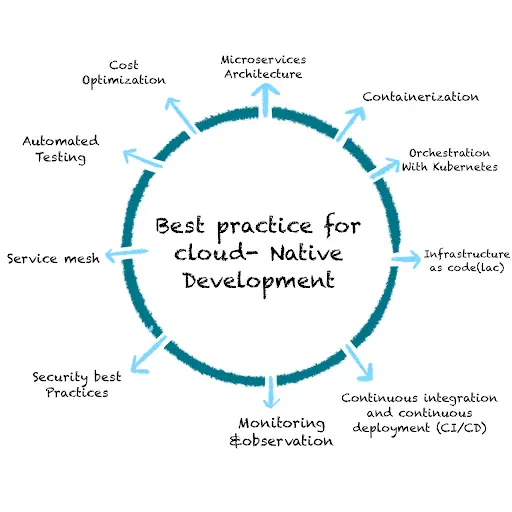

Best Practices for Cloud-Native Development

Cloud-native development is not just about leveraging cloud technologies; it's also about adopting best practices that maximize the benefits of cloud computing. Here are the top best practices to follow:

- Microservices Architecture: Embrace the microservices architecture to build applications as a set of small, loosely coupled services. This approach enables independent deployment, scaling, and maintenance of each service, leading to better agility and scalability.

- Containerization: Use containers to package and deploy your applications and their dependencies. Containers provide a lightweight, consistent runtime environment that can be easily scaled and managed across different environments.

- Orchestration with Kubernetes: Kubernetes is the de facto standard for orchestrating containerized applications. Use Kubernetes to automate the deployment, scaling, and management of your containers, ensuring high availability and resilience.

- Infrastructure as Code (IaC): Adopt IaC practices to define and manage your infrastructure using code. This approach allows you to provision and configure infrastructure resources programmatically, leading to more reliable and reproducible deployments.

- Continuous Integration and Continuous Deployment (CI/CD): Implement CI/CD pipelines to automate the building, testing, and deployment of your applications. This practice helps in delivering changes more frequently and reliably.

- Monitoring and Observability: Use tools like Prometheus and Grafana to monitor the health and performance of your applications. Implement logging and tracing to gain visibility into the behavior of your services.

- Security Best Practices: Implement security best practices such as least privilege access, encryption at rest and in transit, and regular security audits. Use tools like Vault for managing secrets and sensitive data.

- Service Mesh: Consider using a service mesh like Istio to handle service-to-service communication, traffic management, and security policies. Service meshes can help in improving the reliability and security of your microservices architecture.

- Automated Testing: Implement automated testing at all levels of your application, including unit, integration, and end-to-end tests. This practice ensures the reliability and correctness of your application as it evolves.

- Cost Optimization: Optimize your cloud infrastructure costs by using auto-scaling, right-sizing your resources, and leveraging spot instances or reserved instances where applicable.
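
Right-sizing usually starts from observed usage. The sketch below shows one simple heuristic under stated assumptions: take a high percentile of CPU usage samples (nearest-rank p95 here), add headroom (20% here), and round up. The function names, percentile, and headroom are hypothetical choices for illustration, not a recommendation from any particular tool. Real right-sizing also weighs memory, burst patterns, and limits.

```python
from typing import Sequence


def percentile(samples: Sequence[int], pct: int) -> int:
    """Nearest-rank percentile of integer samples; pct must be in 1..100."""
    if not samples or not 1 <= pct <= 100:
        raise ValueError("need samples and a percentile between 1 and 100")
    ordered = sorted(samples)
    rank = (pct * len(ordered) + 99) // 100  # ceil(pct * n / 100) in integers
    return ordered[rank - 1]


def recommend_cpu_request(usage_millicores: Sequence[int], pct: int = 95,
                          headroom_pct: int = 20) -> int:
    """Hypothetical right-sizing rule: p95 usage plus headroom, rounded up to 10m."""
    base = percentile(usage_millicores, pct)
    # base * (1 + headroom), rounded up to the next multiple of 10 millicores,
    # using integer arithmetic to avoid floating-point rounding surprises.
    return (base * (100 + headroom_pct) + 999) // 1000 * 10


if __name__ == "__main__":
    # Twenty usage samples from 100m to 290m of CPU.
    samples = list(range(100, 300, 10))
    print(recommend_cpu_request(samples))  # 340
```

If such a pod currently requests, say, 1000m, a result like this would suggest it is oversized. As with any heuristic, validate the change against real traffic before rolling it out widely.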

Tools and Technologies

Below are popular cloud-native tools and technologies:

Kubernetes:

Orchestrating Containerized Applications

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Originally designed at Google and now maintained under the Cloud Native Computing Foundation (CNCF), Kubernetes has become the de facto standard for container orchestration in the cloud-native ecosystem.

Key Features:

- Automated deployment and scaling of containerized applications.

- Seamless management of containerized workloads and services.

- Load balancing, self-healing, and rolling updates for applications.

Companies Leveraging Kubernetes:

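- Google: Kubernetes draws on Google's long experience running containers internally (notably with its Borg system), and Google offers Kubernetes as a managed service through Google Kubernetes Engine (GKE).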

- Spotify: Spotify relies on Kubernetes to scale its microservices architecture.

Docker:

Containerization for Seamless Application Deployment

Docker has transformed the way applications are packaged and deployed by popularizing containerization. It allows developers to package an application and its dependencies into a single container, ensuring consistency across different environments.

Key Features:

- Lightweight and portable containers for applications.

- Efficient utilization of resources, enabling faster deployment.

- Portability across operating systems and cloud providers (on macOS and Windows, Docker Desktop runs Linux containers inside a lightweight virtual machine).

Companies Leveraging Docker:

- eBay: eBay uses Docker to facilitate consistent and reliable application deployment.

- PayPal: PayPal relies on Docker to streamline its development and deployment workflows.

Helm:

Simplifying Kubernetes Deployments

Helm is a package manager for Kubernetes applications, streamlining the deployment and management of applications on a Kubernetes cluster. It provides a way to define, install, and upgrade even the most complex Kubernetes applications.

Key Features:

- Templating system for defining Kubernetes manifests.

- Easy installation and versioning of Kubernetes applications.

- Shareable charts for consistent application deployment.

Companies Leveraging Helm:

- Microsoft: Helm is widely used within Microsoft Azure Kubernetes Service (AKS) for managing application deployments.

- Ticketmaster: Ticketmaster utilizes Helm to simplify the deployment of its microservices architecture.

Prometheus:

Monitoring and Alerting for Cloud-Native Applications

Prometheus is an open-source monitoring and alerting toolkit designed for cloud-native applications. It scrapes metrics from configured targets at regular intervals and stores them as time series, providing near-real-time insights into the health and performance of applications.

Key Features:

- Multi-dimensional data model for efficient metric collection.

- Powerful query language (PromQL) for data analysis and visualization.

- Dynamic alerting based on configurable thresholds.

Companies Leveraging Prometheus:

- SoundCloud: Prometheus was originally developed at SoundCloud, which relies on it to monitor its distributed systems and ensure optimal performance.

- DigitalOcean: DigitalOcean uses Prometheus to monitor and maintain the health of its services.
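
To make the multi-dimensional data model concrete: Prometheus scrapes plain-text samples over HTTP, and every unique combination of label values becomes its own time series. The sketch below hand-renders a counter in the text exposition format using only the standard library. The function names are hypothetical; in a real service you would normally use an official client library, such as `prometheus_client` for Python, rather than format this yourself.

```python
from typing import Mapping, Tuple

# A label set is a tuple of (name, value) pairs, e.g. (("method", "GET"),).
LabelSet = Tuple[Tuple[str, str], ...]


def _escape_label(value: str) -> str:
    # Label values must escape backslash, double quote, and line feed.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def _format_value(value: float) -> str:
    # Print whole numbers without a trailing ".0"; otherwise use Python's repr.
    return str(int(value)) if float(value).is_integer() else repr(float(value))


def render_counter(name: str, help_text: str,
                   samples: Mapping[LabelSet, float]) -> str:
    """Hand-rolled illustration: render one counter in the text exposition format.

    Every distinct label set is a separate time series, which is what the
    multi-dimensional data model means in practice.
    """
    help_escaped = help_text.replace("\\", "\\\\").replace("\n", "\\n")
    lines = [f"# HELP {name} {help_escaped}", f"# TYPE {name} counter"]
    for labels, value in sorted(samples.items()):
        pairs = ",".join(f'{k}="{_escape_label(v)}"' for k, v in sorted(labels))
        series = f"{name}{{{pairs}}}" if pairs else name
        lines.append(f"{series} {_format_value(value)}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_counter(
        "http_requests_total", "Total HTTP requests.",
        {(("method", "GET"), ("code", "200")): 1027,
         (("method", "POST"), ("code", "500")): 3},
    ), end="")
```

A PromQL query such as `sum by (code) (rate(http_requests_total[5m]))` could then aggregate these series by status code.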

Istio:

Service Mesh for Microservices

Istio is an open-source service mesh platform that facilitates communication, management, and security within microservices architectures. It enhances the visibility and control of traffic between services.

Key Features:

- Traffic management and load balancing for microservices.

- Secure service-to-service communication with built-in authentication.

- Observability through metrics, logs, and traces.

Companies Leveraging Istio:

- eBay: Istio plays a crucial role in eBay's microservices architecture, providing enhanced control and security for service communication.

- Pinterest: Pinterest utilizes Istio to manage and secure its microservices-based infrastructure.
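
Istio's traffic management lets you declare weighted routes, for example sending 90% of requests to a stable version and 10% to a canary. The routing decision itself amounts to weighted random selection, sketched below. The destination names and weights are hypothetical. In Istio, this logic runs in the Envoy sidecar proxies configured by a VirtualService, not in application code.

```python
import random
from typing import Dict, Optional


def pick_destination(weights: Dict[str, int],
                     rng: Optional[random.Random] = None) -> str:
    """Hypothetical helper: pick a destination with probability proportional
    to its integer weight. Zero-weight destinations are never chosen."""
    rng = rng if rng is not None else random.Random()
    total = sum(weights.values())
    if total <= 0 or any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative and sum to more than zero")
    point = rng.randrange(total)  # uniform integer in [0, total)
    cumulative = 0
    for destination, weight in weights.items():
        cumulative += weight
        if point < cumulative:
            return destination
    raise AssertionError("unreachable: point is always below the total weight")


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so the run is reproducible
    counts = {"reviews-v1": 0, "reviews-v2": 0}
    for _ in range(10_000):
        counts[pick_destination({"reviews-v1": 90, "reviews-v2": 10}, rng)] += 1
    print(counts)  # roughly a 90/10 split
```

Shifting more traffic to the canary is then just a matter of changing the weights, with no change to the services themselves.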

Jenkins:

Automation for Continuous Integration and Continuous Deployment (CI/CD)

Jenkins is an open-source automation server for running CI/CD pipelines. It automates the building, testing, and deployment of applications, streamlining the development lifecycle.

Key Features:

- Extensive plugin support for integration with various tools and technologies.

- Distributed builds for scalability and speed.

- Pipeline as code for defining and managing CI/CD workflows.

Companies Leveraging Jenkins:

- Netflix: Jenkins is a critical component in Netflix's CI/CD pipelines, ensuring the rapid and reliable delivery of its streaming services.

- Airbnb: Airbnb relies on Jenkins for automating its deployment processes and maintaining a robust CI/CD infrastructure.

OpenShift:

Kubernetes-based Container Platform

OpenShift, developed by Red Hat, is a Kubernetes-based container platform that simplifies the deployment and management of containerized applications. It adds developer and operational tools on top of Kubernetes to streamline the entire application lifecycle.

Key Features:

- Developer-friendly tools for building and deploying applications.

- Integrated container registry and image management.

- Unified console for managing Kubernetes resources.

Companies Leveraging OpenShift:

- BMW: OpenShift is an integral part of BMW's digital transformation, enabling efficient container orchestration and application deployment.

- ANZ Banking Group: ANZ utilizes OpenShift for building and managing its containerized applications securely.

Terraform:

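Infrastructure as Code (IaC)

Terraform, developed by HashiCorp, is an infrastructure as code (IaC) tool that enables developers to define and provision infrastructure using a declarative configuration language (HCL). It supports multiple cloud providers, making it a versatile choice for managing infrastructure. Terraform was open source until 2023, when HashiCorp moved it to the Business Source License; OpenTofu is a community-maintained open-source fork.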

Key Features:

- Declarative configuration for defining infrastructure.

- Multi-cloud support for provisioning resources across different providers.

- Plan and apply workflow for managing infrastructure changes.

Companies Leveraging Terraform:

- Slack: Terraform is instrumental in Slack's infrastructure provisioning, allowing the team to manage resources across various cloud providers seamlessly.

- HashiCorp: HashiCorp uses Terraform for managing and provisioning infrastructure for its cloud services.
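
The plan/apply workflow is essentially a diff. Terraform compares your configuration with its recorded state, shows the proposed changes, and changes real infrastructure only when you apply. The sketch below imitates just the plan step, using Terraform-like symbols: `+` for create, `~` for update in place, and `-` for destroy. The resource addresses and attribute values are hypothetical. Real Terraform also refreshes state from provider APIs and detects changes that force a resource to be replaced; this sketch omits both.

```python
from typing import Any, Dict, List

# Hypothetical model: resource address -> its attributes.
Resources = Dict[str, Dict[str, Any]]


def plan(desired: Resources, state: Resources) -> List[str]:
    """Describe the changes needed to make `state` match `desired`.

    Nothing is modified here; a separate apply step would act on the result.
    """
    changes: List[str] = []
    for address in sorted(desired.keys() | state.keys()):
        if address not in state:
            changes.append(f"+ {address}")
        elif address not in desired:
            changes.append(f"- {address}")
        elif desired[address] != state[address]:
            want, have = desired[address], state[address]
            changed = sorted(k for k in want.keys() | have.keys() if want.get(k) != have.get(k))
            changes.append(f"~ {address} ({', '.join(changed)})")
    return changes


if __name__ == "__main__":
    desired = {
        "aws_instance.web": {"instance_type": "t3.small", "monitoring": True},
        "aws_s3_bucket.logs": {"bucket": "example-logs"},
    }
    state = {
        "aws_instance.web": {"instance_type": "t3.micro", "monitoring": True},
        "aws_instance.old": {"instance_type": "t2.micro"},
    }
    for line in plan(desired, state):
        print(line)
    # - aws_instance.old
    # ~ aws_instance.web (instance_type)
    # + aws_s3_bucket.logs
```

Keeping plan and apply separate is what allows a team to review the proposed changes, for example in a pull request, before anything touches production.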

Vault:

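Secrets Management and Data Protection

Vault, developed by HashiCorp, is a tool for managing secrets and protecting sensitive data. Like Terraform, it moved from an open-source license to the Business Source License in 2023; OpenBao is a community-maintained open-source fork. Vault provides a secure and centralized way to manage access to tokens, passwords, certificates, and encryption keys.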

Key Features:

- Centralized secrets management with dynamic secret generation.

- Data encryption and tokenization for enhanced security.

- Auditing and access controls for compliance.

Companies Leveraging Vault:

- Adobe: Vault is a critical component in Adobe's security infrastructure, ensuring the secure management of secrets and sensitive data.

- Splunk: Splunk uses Vault to enhance the security of its infrastructure and protect sensitive information.

Knative:

Building and Running Serverless Applications

Knative is an open-source platform that extends Kubernetes to enable serverless application development. It provides a set of components for building, deploying, and managing serverless workloads on Kubernetes.

Key Features:

- Autoscaling and event-driven architecture for serverless applications.

- Seamless integration with Kubernetes and other cloud-native tools.

- Simplified development and deployment of serverless functions.

Companies Leveraging Knative:

- IBM: Knative is utilized by IBM for building and deploying serverless applications on Kubernetes, enhancing scalability and efficiency.

- GitHub: GitHub incorporates Knative for certain serverless workflows, optimizing resource utilization and response times.

Engineering Platforms and Cloud-Native Practices

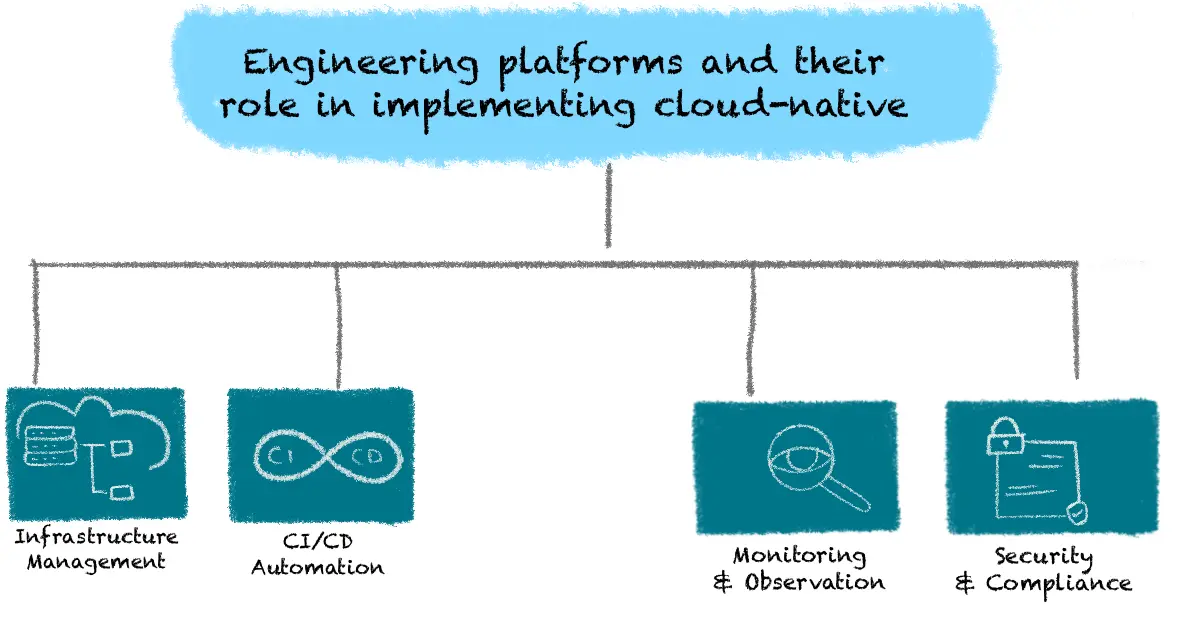

Engineering Platforms and Their Role in Implementing Cloud-Native Practices

Engineering platforms play a pivotal role in the successful implementation of cloud-native practices. These platforms provide the necessary tools, frameworks, and services to streamline the development, deployment, and management of cloud-native applications. Key roles of engineering platforms include:

Infrastructure Management:

Engineering platforms automate infrastructure provisioning and management through Infrastructure as Code (IaC) tools. This automation ensures consistent environments across development, testing, and production, reducing errors and accelerating deployment times.

CI/CD Automation:

Continuous Integration and Continuous Deployment (CI/CD) pipelines are integral to engineering platforms. These pipelines automate the process of building, testing, and deploying code, enabling faster and more reliable releases.

Monitoring and Observability:

Engineering platforms integrate advanced monitoring and observability tools to provide insights into application performance and health. This observability is crucial for detecting and resolving issues promptly, ensuring system reliability and performance.

Security and Compliance:

These platforms offer robust security features, including automated security scans, compliance checks, and access controls. This helps organizations meet regulatory requirements and protect their applications from vulnerabilities.

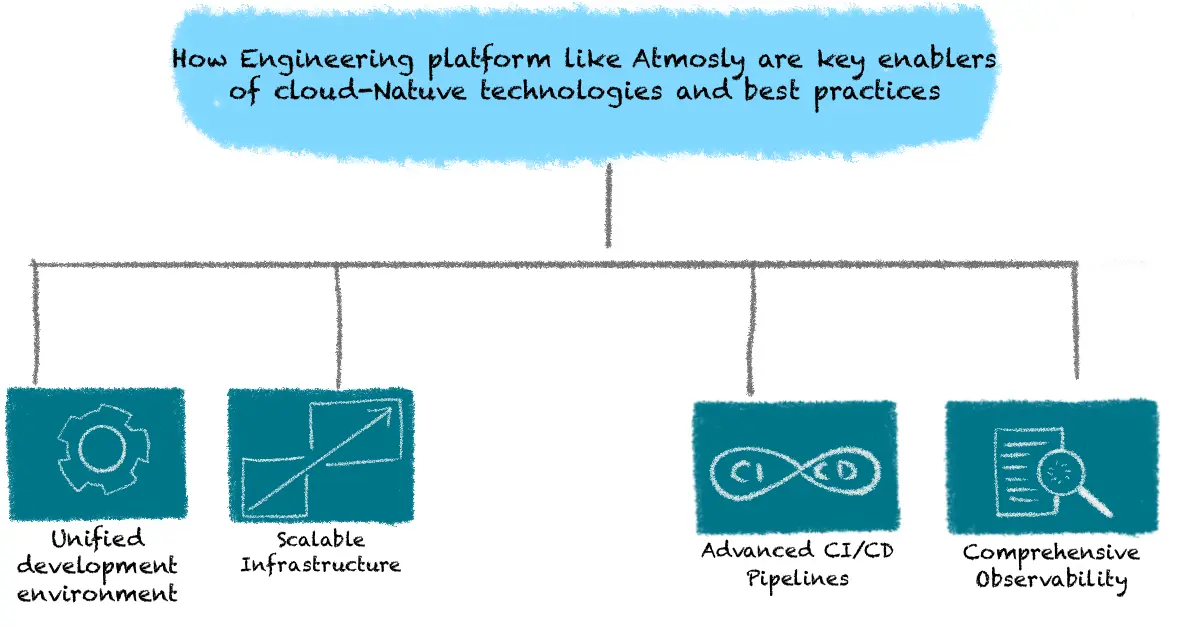

How Engineering Platforms Like Atmosly Are Key Enablers of Cloud-Native Technologies and Best Practices

Atmosly, as a comprehensive engineering platform, is a key enabler of cloud-native technologies and best practices. Here's how Atmosly facilitates cloud-native adoption:

Unified Development Environment:

Atmosly provides a unified development environment that supports the entire software development lifecycle. This environment integrates seamlessly with cloud-native tools like Kubernetes and Docker, enabling developers to build, test, and deploy applications efficiently.

Scalable Infrastructure:

Atmosly leverages cloud-native infrastructure to provide scalable resources on demand. This ensures that applications can handle varying loads without compromising performance or availability. Autoscaling features automatically adjust resources based on real-time demand, optimizing cost and performance.

Advanced CI/CD Pipelines:

Atmosly offers advanced CI/CD pipelines that automate the integration and deployment of code. These pipelines incorporate best practices such as automated testing, code quality checks, and continuous delivery, ensuring that releases are both rapid and reliable.

Comprehensive Observability:

With integrated monitoring and observability tools, Atmosly provides deep insights into application performance and health. Real-time metrics, logs, and traces help teams identify and resolve issues quickly, maintaining high availability and performance.

How Atmosly Supports Cloud-Native Principles, Such as Scalability, Resilience, and Observability

Scalability:

- Autoscaling: Atmosly supports autoscaling, allowing applications to automatically scale up or down based on demand. This ensures that applications can handle traffic spikes without manual intervention.

- Load Balancing: Built-in load balancing features distribute traffic evenly across instances, preventing any single instance from becoming a bottleneck and ensuring consistent performance.

Resilience:

- Self-Healing: Atmosly’s infrastructure includes self-healing capabilities that automatically replace failed instances, ensuring continuous availability and minimizing downtime.

- Fault Tolerance: By employing redundancy and failover mechanisms, Atmosly ensures that applications can continue to operate even in the face of component failures.

Observability:

- Real-Time Monitoring: Atmosly provides real-time monitoring of application metrics, logs, and traces. This comprehensive observability helps teams understand the internal state of their applications and infrastructure, facilitating quick issue detection and resolution.

- Alerting and Incident Management: Integrated alerting and incident management tools notify teams of potential issues, enabling proactive maintenance and faster incident response.

By leveraging Atmosly, organizations can adopt cloud-native technologies and best practices with confidence. Atmosly’s robust features and seamless integration with cloud-native tools make it an ideal platform for building scalable, resilient, and observable applications. Whether you're modernizing existing applications or developing new cloud-native solutions, Atmosly provides the tools and support needed to succeed in the dynamic cloud-native landscape.

Conclusion

The integration of cloud-native technologies into DevOps practices marks a significant evolution in software development, offering substantial gains in scalability, resilience, and efficiency. By leveraging tools such as Kubernetes, Docker, and Prometheus, organizations can streamline their development processes, enhance application performance, and ensure robust security and compliance. The implementation of cloud-native principles through advanced CI/CD pipelines, Infrastructure as Code, and comprehensive observability frameworks fosters a culture of continuous improvement and collaboration.

Engineering platforms like Atmosly are pivotal in this transformation. They provide the essential infrastructure, automation, and insights needed to realize the benefits of cloud-native technologies fully. Atmosly’s capabilities in scaling, self-healing, and real-time monitoring empower teams to build, deploy, and manage applications with greater agility and reliability. As organizations continue to navigate the complexities of modern software development, adopting cloud-native technologies and leveraging platforms like Atmosly will be crucial for maintaining a competitive edge and driving innovation.

The synergy between cloud-native technologies and DevOps practices not only addresses the challenges of traditional setups but also paves the way for a more dynamic and efficient development environment. By embracing these advancements, organizations can achieve faster development cycles, improved scalability, and enhanced reliability, ultimately delivering better products and services to their customers. Atmosly stands ready to support this journey, providing the tools and expertise necessary to thrive in the ever-evolving landscape of cloud-native development.

Book a 30-minute Atmosly demo to see pre-built CI/CD, GitOps, and observability in action